MCU: An Evaluation Framework for Open-Ended Game Agents

ICML · 2025 · arXiv: arxiv.org/abs/2310.08367

Abstract

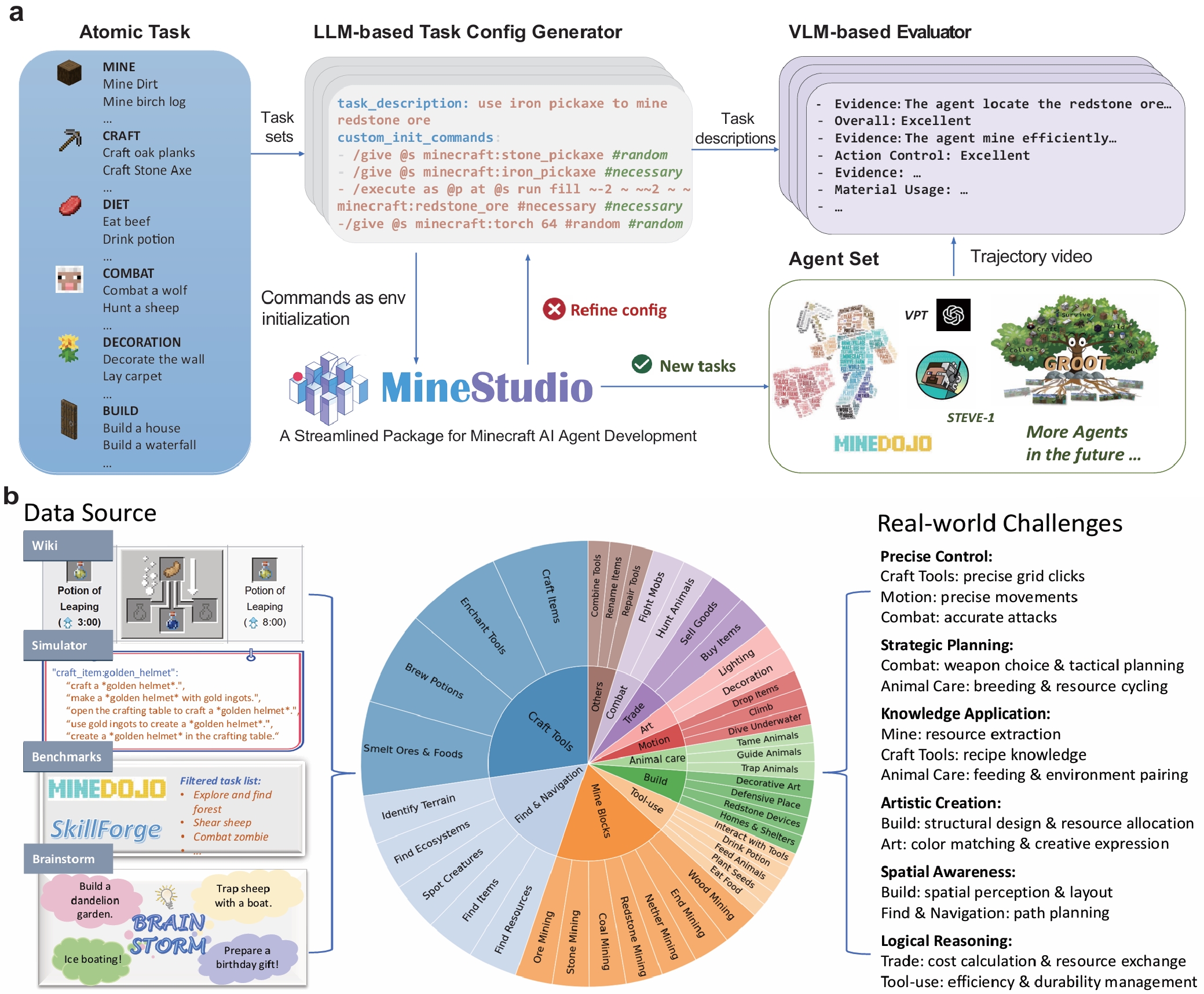

Developing AI agents capable of interacting with open-world environments to solve diverse tasks is a compelling challenge. However, evaluating such open-ended agents remains difficult, and current benchmarks face scalability limitations. To address this, we introduce Minecraft Universe (MCU), a comprehensive evaluation framework set within the open-world video game Minecraft. MCU incorporates three key components: (1) an expanding collection of 3,452 composable atomic tasks spanning 11 major categories and 41 subcategories of challenges; (2) a task composition mechanism capable of generating an unbounded variety of tasks of varying difficulty; and (3) a general evaluation framework that achieves 91.5% alignment with human ratings for open-ended task assessment. Empirical results reveal that even state-of-the-art foundation agents struggle as task diversity and complexity increase. These findings highlight the necessity of MCU as a robust benchmark to drive progress in AI agent development within open-ended environments.
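To give a rough sense of why composing atomic tasks scales so quickly, the sketch below counts unordered pairs drawn from 3,452 atomic tasks and samples one composite task. It is an illustration only, not the MCU composition mechanism: the task names, `compose_tasks`, and `count_compositions` are hypothetical, and the abstract does not specify how tasks are actually combined or how difficulty is varied.

```python
import math
import random

# Hypothetical atomic task names, for illustration only (not taken from MCU).
ATOMIC_TASKS = ["mine_stone", "craft_pickaxe", "build_shelter", "plant_wheat"]


def compose_tasks(atomic_tasks, k, rng):
    """Sample k distinct atomic tasks and join them into one composite task."""
    chosen = rng.sample(atomic_tasks, k)
    return " and ".join(chosen)


def count_compositions(n_atomic, k):
    """Number of unordered k-task combinations from n_atomic atomic tasks."""
    return math.comb(n_atomic, k)


rng = random.Random(0)  # fixed seed so the sampled composite is reproducible
composite = compose_tasks(ATOMIC_TASKS, 2, rng)

# Pairs alone from 3,452 atomic tasks already number in the millions.
pairs = count_compositions(3452, 2)
```

Even under this simplified assumption (plain unordered pairs, no variation in environment or difficulty), the number of composite tasks grows combinatorially with the size of the atomic task pool.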

Citation

@inproceedings{zheng2025mcu,
  title={MCU: An Evaluation Framework for Open-Ended Game Agents},
  author={Zheng, Xinyue and Lin, Haowei and He, Kaichen and Wang, Zihao and Zheng, Zilong and Liang, Yitao},
  booktitle={Proceedings of the 42nd International Conference on Machine Learning},
  year={2025}
}