RuleReasoner: Reinforced Rule-based Reasoning via Domain-aware Dynamic Sampling

ICLR · 2026 · arXiv: arxiv.org/abs/2506.08672

Abstract

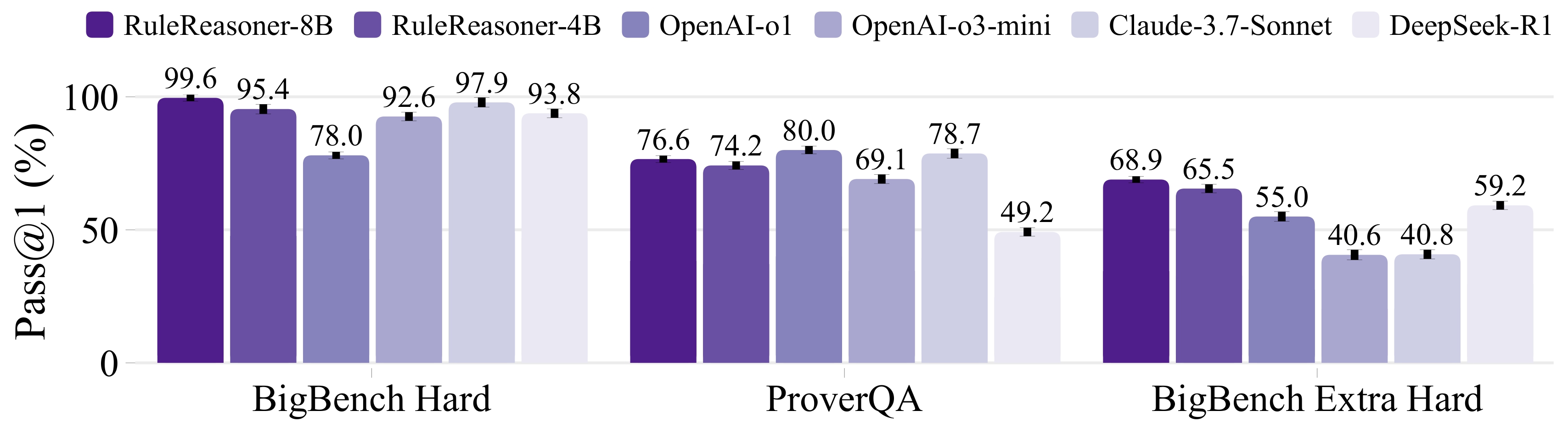

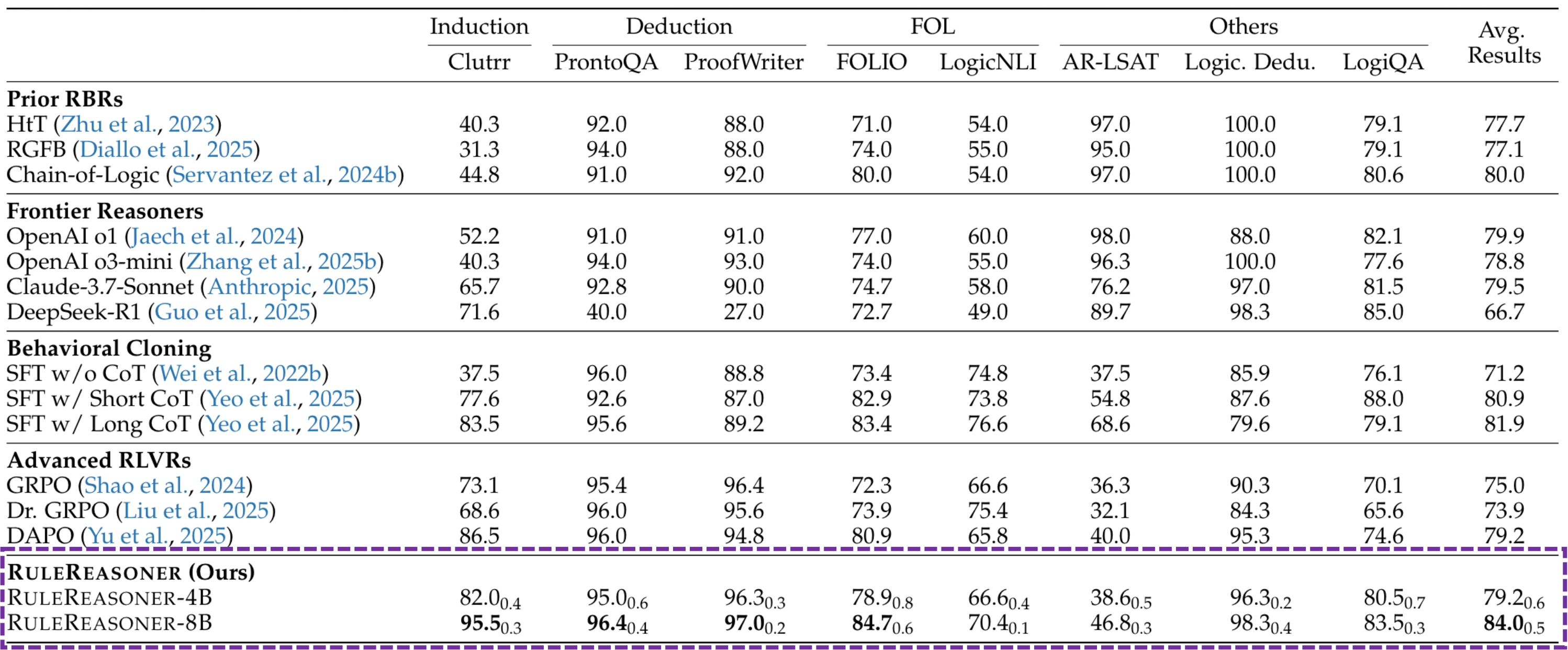

Rule-based reasoning has been acknowledged as one of the fundamental problems in reasoning, while variations in rule formats, types, and complexity in real-world applications pose severe challenges. Recent studies have shown that large reasoning models (LRMs) have remarkable reasoning capabilities, and that their performance is substantially enhanced by reinforcement learning (RL). However, it remains an open question whether small reasoning models (SRMs) can learn rule-based reasoning effectively, with robust generalization across diverse tasks and domains. To address this, we introduce Reinforced Rule-based Reasoning, a.k.a. RuleReasoner, a simple yet effective method that conducts rule-based reasoning via a wide collection of curated tasks and a novel domain-aware dynamic sampling approach. Specifically, RuleReasoner resamples each training batch by updating the sampling weights of different domains based on historical rewards. This facilitates domain augmentation and flexible online learning schedules for RL, obviating the need for the pre-hoc, human-engineered mixed-training recipes used in existing methods. Empirical evaluations on in-distribution (ID) and out-of-distribution (OOD) benchmarks show that RuleReasoner outperforms frontier LRMs by a significant margin (Δ4.1% average points on eight ID tasks and Δ10.4% average points on three OOD tasks over OpenAI-o1). Notably, our approach also exhibits higher computational efficiency than prior dynamic sampling methods for RL.

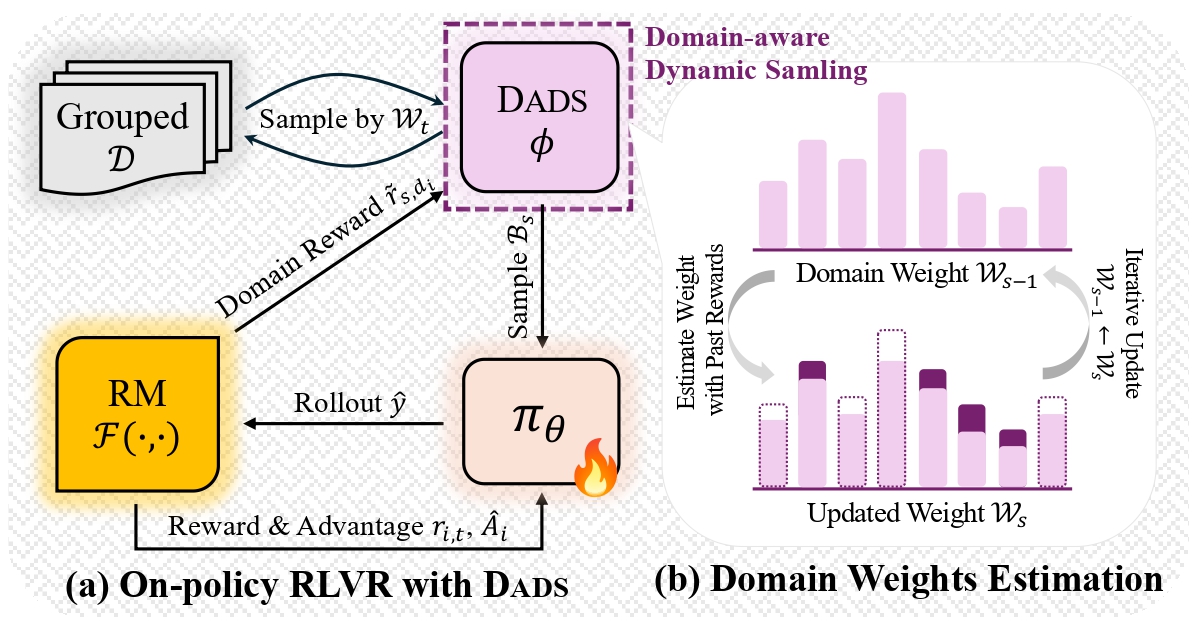

Domain-aware Policy Optimization with Dynamic Sampling

We propose Domain-aware Dynamic Sampling (DADS), a simple yet effective sampling method for reinforcement learning with verifiable rewards (RLVR), aimed at improving the performance of a policy model $\pi_\theta$ on multi-domain rule-based reasoning.

DADS dynamically adjusts the probability of sampling data from each domain based on that domain's historical rewards. When re-sampling the training batch $\mathcal{B}_s$, DADS prioritizes domains that yield lower verifiable rewards or that lag behind a target reward. This improves sample efficiency and mitigates domain imbalance, leading to faster and more stable learning of policies that satisfy the reward specifications. In this work, we instantiate RuleReasoner with a variant of the GRPO policy-gradient algorithm to demonstrate its effectiveness and efficiency.
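The re-weighting idea above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes three things: each domain's historical reward is an exponential moving average (`momentum`); a domain's priority is its gap to a target reward plus a small floor (`epsilon`) so that no domain is starved; and priorities become sampling probabilities through a temperature softmax. The class `DomainAwareSampler`, its parameters, and the domain names are hypothetical.

```python
import math
import random


class DomainAwareSampler:
    """Hypothetical sketch of domain-aware dynamic sampling (not the paper's code)."""

    def __init__(self, domains, target_reward=1.0, momentum=0.9,
                 temperature=0.5, epsilon=0.05):
        self.domains = list(domains)
        self.target = target_reward      # assumed target reward per domain
        self.momentum = momentum         # assumed EMA factor for historical reward
        self.temperature = temperature   # assumed softmax temperature
        self.epsilon = epsilon           # assumed floor so every domain keeps some mass
        # Historical (EMA) reward per domain, initialised to 0.
        self.hist = {d: 0.0 for d in self.domains}

    def update(self, batch_rewards):
        """batch_rewards maps domain -> list of verifiable rewards from the last step."""
        for d, rewards in batch_rewards.items():
            if rewards:
                mean_r = sum(rewards) / len(rewards)
                self.hist[d] = self.momentum * self.hist[d] + (1 - self.momentum) * mean_r

    def weights(self):
        """Domains further below the target reward receive larger sampling weights."""
        gaps = [max(self.target - self.hist[d], 0.0) + self.epsilon for d in self.domains]
        logits = [g / self.temperature for g in gaps]
        m = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(x - m) for x in logits]
        z = sum(exps)
        return {d: e / z for d, e in zip(self.domains, exps)}

    def sample_batch(self, pools, batch_size, rng=random):
        """Resample a batch: pick a domain by weight, then an example from that domain's pool."""
        w = self.weights()
        picked = rng.choices(self.domains, weights=[w[d] for d in self.domains], k=batch_size)
        return [(d, rng.choice(pools[d])) for d in picked]


sampler = DomainAwareSampler(["logic", "arithmetic_rules", "legal"])
sampler.update({"logic": [1, 1, 1, 0], "arithmetic_rules": [0, 0, 1, 0], "legal": [1, 1, 1, 1]})
print(sampler.weights())  # "arithmetic_rules" (lowest reward) gets the largest weight
```

After each RL step, the per-domain rewards from the rollouts are fed back through `update`, and the next batch is drawn with `sample_batch`. No mixing ratio needs to be fixed in advance: a domain whose reward approaches the target gradually loses sampling mass, while the `epsilon` floor keeps it from disappearing entirely.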

Empirical Results

Citation

@inproceedings{liu2025rulereasoner,
  title={RuleReasoner: Reinforced Rule-based Reasoning via Domain-aware Dynamic Sampling},
  author={Yang Liu and Jiaqi Li and Zilong Zheng},
  year={2026},
  booktitle={The Fourteenth International Conference on Learning Representations},
  url={https://openreview.net/forum?id=MQV4TJyqnb}
}